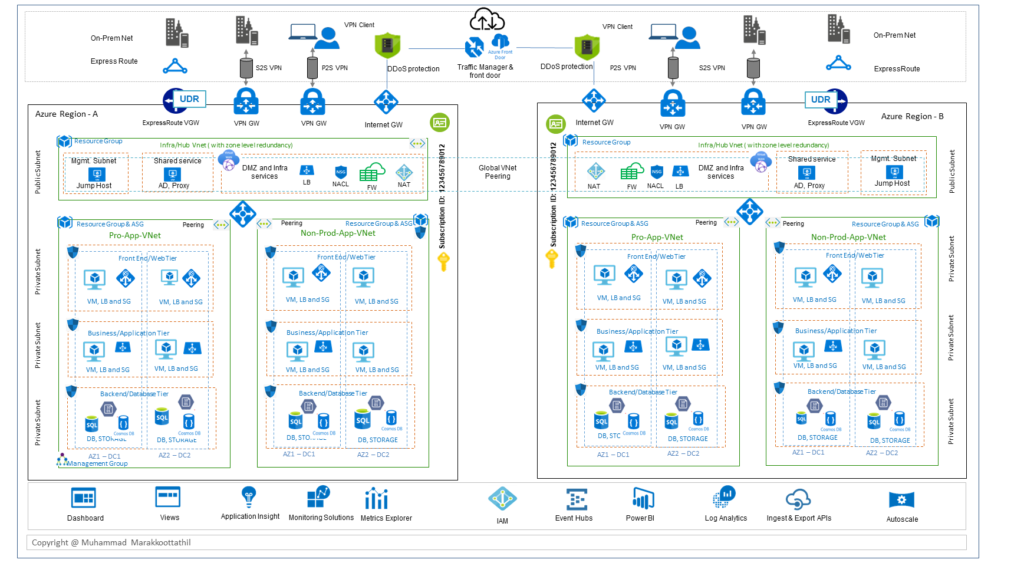

A Quick Overview of Azure IaaS Reference Architecture

Cloud services are increasingly at the heart of an organization’s digital business strategy. Among public cloud offerings, Azure is second only to AWS in market share. In this blog, let's look at “A Quick Overview of Azure IaaS Reference Architecture”.

For easy reference, and to separate the different architectural functions, the overall IaaS design is grouped into three key areas: the Edge block, the Application block, and the Monitoring and Governance block. These are very similar to the areas in my previous blog on AWS.

As shown in the above diagram, the idea is to tailor various Azure features and capabilities to meet the security, network, and governance requirements. The reference architecture blocks should help with the following scenarios:

- Host multiple related workloads.

- Migrate workloads from an on-premises environment to Azure.

- Implement shared or centralized network, ADC, security, and access requirements across workloads.

- Segregate Production, DMZ, UAT, and Dev environments appropriately for a large enterprise.

Edge Block

This is the landing zone for the cloud services. This block primarily consists of the network, security, and application delivery controllers (ADCs), VPN gateways, and similar components. Its job is to route traffic, provide load balancing and load sharing, and secure connectivity to the cloud.
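To make the load-sharing idea concrete: Azure Load Balancer's default distribution mode hashes a flow's five-tuple (source IP, source port, destination IP, destination port, protocol), so every packet of one connection reaches the same backend. The sketch below illustrates that idea only; SHA-256 is a stand-in rather than Azure's internal hash, and the backend addresses are hypothetical.

```python
import hashlib
from typing import NamedTuple


class Flow(NamedTuple):
    """A network flow identified by its five-tuple."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


def pick_backend(flow: Flow, backends: list[str]) -> str:
    """Map a flow to one backend using a stable hash of its five-tuple.

    Illustrative only: SHA-256 here is a stand-in, not Azure Load Balancer's
    internal hash function.
    """
    if not backends:
        raise ValueError("backend pool is empty")
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


# Hypothetical backend VM addresses in a spoke subnet.
pool = ["10.1.1.4", "10.1.1.5", "10.1.1.6"]
flow = Flow("203.0.113.10", 50123, "20.0.0.1", 443, "TCP")

# Every packet of the same flow maps to the same backend.
print(pick_backend(flow, pool))
```

A new connection from the same client usually uses a different source port, so it may land on a different backend; that is where the load sharing comes from. Azure also offers client-IP session persistence for cases where a user must stick to one backend.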

Cloud edge components connect your on-premises or physical datacenter networks to Azure, along with any internet connectivity. Incoming traffic arrives at the edge services in the hub (Azure DNS, Azure Front Door (AFD), Azure DDoS Protection, Virtual WAN, Azure Load Balancer, Application Gateway, etc.) and should then flow through the hub's security controls, such as NSGs, Azure Firewall, IDS, and IPS, with UDRs steering traffic through them, before reaching the back-end servers and services in the spokes. Internet-bound packets from the workloads should likewise flow through the security appliances in the perimeter network before they leave the network. This flow enables policy enforcement, inspection, and auditing.
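Routing through the hub relies on how Azure picks a route: the most specific matching prefix wins, and when a user-defined route (UDR) and a system route share the same prefix, the UDR wins. The following minimal sketch, assuming a spoke at 10.1.0.0/16, a hub at 10.0.0.0/16, and a hypothetical firewall at 10.0.1.4, shows a 0.0.0.0/0 UDR forcing internet-bound and other-spoke traffic through the hub firewall. The next-hop labels are modeled on Azure's next-hop types; the code is a simplification, not Azure's implementation.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Route:
    prefix: ipaddress.IPv4Network
    next_hop: str              # label modeled on Azure next-hop types
    user_defined: bool = False


def select_route(routes: list[Route], destination: str) -> Optional[Route]:
    """Pick the most specific matching prefix; on a tie, a user-defined route wins."""
    dst = ipaddress.ip_address(destination)
    matches = [r for r in routes if dst in r.prefix]
    if not matches:
        return None
    return max(matches, key=lambda r: (r.prefix.prefixlen, r.user_defined))


net = ipaddress.ip_network
FIREWALL = "VirtualAppliance 10.0.1.4"  # hypothetical Azure Firewall IP in the hub

spoke_routes = [
    Route(net("10.1.0.0/16"), "VnetLocal"),                # system route: this spoke
    Route(net("10.0.0.0/16"), "VNetPeering"),              # system route: peered hub
    Route(net("0.0.0.0/0"), "Internet"),                   # system default route
    Route(net("0.0.0.0/0"), FIREWALL, user_defined=True),  # UDR: send egress via hub
]

for dst in ["10.1.2.5", "10.0.1.4", "10.2.3.4", "8.8.8.8"]:
    print(dst, "->", select_route(spoke_routes, dst).next_hop)
```

Here 10.2.3.4 (another spoke) and 8.8.8.8 (internet) both resolve to the firewall, which is exactly the inspection point the hub is meant to provide.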

Application Block

Workload components are where your actual applications and services reside. It’s where your application development teams spend most of their time.

Most public applications are websites. Azure can run a website on either an IaaS virtual machine or an Azure Web Apps site (PaaS). Azure Web Apps integrates with virtual networks to deploy web apps in a spoke network zone.

Another key consideration is application high availability (HA) and load sharing. Some services can also be placed directly inside a spoke: for example, HDInsight supports deploying into a location-based virtual network, so an HDInsight cluster can be deployed in a spoke of the virtual datacenter.

For application infrastructure, virtual networks are mainly the anchor points for integrating platform as a service (PaaS) products such as Azure Storage, Azure SQL, and other integrated public services that have public endpoints. With service endpoints and Azure Private Link, you can integrate these public services with your private network.

From a design perspective, applications can be grouped into their own spokes or placed in a flat network design. Complex multitier workloads are also possible: one approach is to use a dedicated subnet for every tier or application within the same virtual network.
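The subnet-per-tier approach is easy to plan up front. This is a small sketch, assuming a hypothetical spoke address space of 10.1.0.0/16 and three hypothetical tiers (web, app, data), that carves one /24 per tier with Python's `ipaddress` module. It accounts for the five addresses Azure reserves in every subnet (the network address, the default gateway, two addresses for Azure DNS, and the broadcast address).

```python
import ipaddress

# Hypothetical spoke address space and tier names, chosen for illustration.
SPOKE_SPACE = ipaddress.ip_network("10.1.0.0/16")
TIERS = ["web", "app", "data"]

# Azure reserves five addresses in every subnet.
AZURE_RESERVED_PER_SUBNET = 5


def plan_tier_subnets(space, tiers, new_prefix=24):
    """Assign one subnet per tier, carved sequentially from the address space."""
    subnets = space.subnets(new_prefix=new_prefix)
    plan = {}
    for tier in tiers:
        try:
            plan[tier] = next(subnets)
        except StopIteration:
            raise ValueError(f"{space} has no room for tier {tier!r}") from None
    return plan


for tier, subnet in plan_tier_subnets(SPOKE_SPACE, TIERS).items():
    usable = subnet.num_addresses - AZURE_RESERVED_PER_SUBNET
    print(f"{tier:<5} {subnet}  usable={usable}")
```

Each /24 yields 251 usable addresses. Keeping tiers in separate subnets also gives each tier its own place to attach NSGs and route tables.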

Monitoring and Governance Block

Monitoring components provide visibility and alerting from all the other component types. All teams should have access to monitoring for the components and services they have access to. If you have a centralized help desk or operations teams, they require integrated access to the data provided by these components.

Azure offers different types of logging and monitoring services to track the behavior of Azure-hosted resources. Governance and control of workloads in Azure is based not just on collecting log data but also on the ability to trigger actions based on specific reported events.
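Triggering an action from reported data can be pictured as a rule that evaluates a metric over a window and, when a threshold is crossed, calls an action. The sketch below is a simplified model in that spirit (loosely similar to a metric alert rule invoking an action group); the metric name, threshold, window, and `notify` action are all hypothetical, and none of this is the Azure Monitor API.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class AlertRule:
    """Simplified metric alert: fire an action when the windowed average crosses a threshold."""
    metric: str
    threshold: float
    window: int                           # number of most recent samples to average
    action: Callable[[str, float], None]  # stand-in for an action group

    def evaluate(self, samples: list[float]) -> bool:
        recent = samples[-self.window:]
        if len(recent) < self.window:
            return False  # not enough data yet to evaluate the window
        value = mean(recent)
        if value > self.threshold:
            self.action(self.metric, value)
            return True
        return False


fired = []


def notify(metric: str, value: float) -> None:
    # Hypothetical action: in practice this might page on-call or open a ticket.
    fired.append((metric, value))
    print(f"ALERT {metric} averaged {value:.1f}")


rule = AlertRule(metric="cpu_percent", threshold=80.0, window=3, action=notify)
rule.evaluate([40, 55, 60])       # last three average 51.7: no action
rule.evaluate([40, 70, 85, 95])   # last three average 83.3: action fires
```

Averaging over a window rather than reacting to a single sample avoids firing on brief spikes.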

Key services in this block include identity and directory services, management groups, subscriptions, resource group management, Azure Sentinel, Azure Monitor, and Azure Network Watcher.
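Management groups, subscriptions, and resource groups form a hierarchy, and a policy assigned at one scope is inherited by every scope beneath it. This minimal model illustrates that inheritance; the scope and policy names are hypothetical placeholders, and the class is not an Azure SDK type.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Scope:
    """A node in the governance hierarchy (management group, subscription, or resource group)."""
    name: str
    parent: Optional["Scope"] = None
    policies: list[str] = field(default_factory=list)

    def effective_policies(self) -> list[str]:
        """Policies assigned at this scope plus all those inherited from ancestors."""
        inherited = self.parent.effective_policies() if self.parent else []
        return inherited + self.policies


# Hypothetical hierarchy and policy names, for illustration only.
root_mg = Scope("contoso-root", policies=["allowed-locations"])
landing_mg = Scope("landing-zones", parent=root_mg)
prod_sub = Scope("prod-subscription", parent=landing_mg, policies=["require-cost-center-tag"])
web_rg = Scope("rg-web", parent=prod_sub, policies=["deny-public-ip"])

print(web_rg.effective_policies())
# ['allowed-locations', 'require-cost-center-tag', 'deny-public-ip']
```

Assigning broad guardrails high in the tree (such as allowed regions) and narrower rules closer to the workload keeps governance consistent without repeating assignments in every subscription.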

High Availability and Redundancy

Customers that require high availability must protect their services by deploying the same project as two or more implementations in different regions. In addition to SLA concerns, several common scenarios benefit from running multiple virtual datacenters:

- Regional or global presence of your end users or partners.

- Disaster recovery requirements.

- A mechanism to divert traffic between datacenters for load or performance.

Synchronization and heartbeat monitoring of applications in different implementations require them to communicate over the network. The following options can connect multiple implementations in different regions:

- Hub-to-hub communication built into Azure Virtual WAN hubs across regions in the same Virtual WAN.

- Multiple ExpressRoute circuits connected via your corporate backbone, and your multiple VDC implementations connected to the ExpressRoute circuits.

- Site-to-site VPN connections between the hub zone of your VDC implementations in each Azure region.

Both Azure Traffic Manager and Azure Front Door periodically check the health of listening endpoints in the different VDC implementations and, if those endpoints fail, automatically route traffic to the next closest VDC. Traffic Manager uses real-time user measurements and DNS to route users to the closest VDC (or the next closest during a failure). Azure Front Door is a reverse proxy running at over 100 Microsoft backbone edge sites that uses anycast to route users to the closest listening endpoint.
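The "closest healthy endpoint, else the next closest" behavior can be sketched as a filter followed by a minimum. This is a simplified model, assuming hypothetical regions and latency figures; it does not reproduce how Traffic Manager or Front Door measure latency, and it simply raises when no endpoint is healthy, whereas the real services have their own fallback behavior.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    region: str
    latency_ms: float     # hypothetical measured latency from the user's network
    healthy: bool = True  # result of the latest health probe


def closest_healthy(endpoints: list[Endpoint]) -> Endpoint:
    """Return the lowest-latency endpoint that passed its health probe.

    An endpoint that fails its probe drops out, so the next closest one wins.
    """
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        raise RuntimeError("no healthy endpoints")
    return min(healthy, key=lambda e: e.latency_ms)


# Hypothetical VDC implementations and latencies.
vdcs = [
    Endpoint("westeurope", 18.0),
    Endpoint("northeurope", 27.0),
    Endpoint("eastus", 95.0),
]

print(closest_healthy(vdcs).region)  # westeurope
vdcs[0].healthy = False              # the West Europe health probe fails
print(closest_healthy(vdcs).region)  # northeurope
```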

Summary

The architecture presented is primarily based on a hub-and-spoke network topology, built with either virtual network peering or Virtual WAN hubs. The hub hosts common shared services, and the spokes host specific applications and workloads. The topology can also mirror the structure of company roles, where departments such as Central IT, DevOps, and Operations and Maintenance work together while each performs its specific role.
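One property shapes every peering-based hub-and-spoke design: virtual network peering is non-transitive, so two spokes that both peer with the hub cannot reach each other directly. The sketch below models that with hypothetical VNet names. The spoke-to-hub-to-spoke path it returns assumes something in the hub (for example Azure Firewall or a network virtual appliance, plus UDRs in the spokes) forwards the traffic; peering alone does not.

```python
from collections import defaultdict

# Hypothetical hub-and-spoke layout: each spoke is peered only with the hub.
PEERINGS = [("hub", "spoke-web"), ("hub", "spoke-data")]


def build_peer_map(pairs):
    """Record each peering in both directions."""
    peers = defaultdict(set)
    for a, b in pairs:
        peers[a].add(b)
        peers[b].add(a)
    return peers


def find_path(peers, src, dst, hub="hub"):
    """Return the VNet hops from src to dst, or None if unreachable.

    Peering is non-transitive, so spoke-to-spoke traffic needs an explicit
    hop through the hub, which assumes a forwarding device lives there.
    """
    if src == dst:
        return [src]
    if dst in peers[src]:
        return [src, dst]
    if hub in peers[src] and dst in peers[hub]:
        return [src, hub, dst]
    return None


peers = build_peer_map(PEERINGS)
print("spoke-data" in peers["spoke-web"])            # False: no direct peering
print(find_path(peers, "spoke-web", "spoke-data"))  # ['spoke-web', 'hub', 'spoke-data']
```

Forcing spoke-to-spoke traffic through the hub is usually intentional: it is the same inspection point that the Edge block relies on.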

For more related blogs on the topic, please refer to the “Cloud Computing & Services” section. Happy learning!
